Does Lowering an NVIS Antenna Improve It?

While there is no doubt that a very low antenna (below 0.05 wavelengths) can work for NVIS, I’ve read some accounts where lowering an antenna down to that range improved the signal-to-noise ratio, particularly in the presence of interference from distant thunderstorms. Personally, I’ve never experienced this effect for antennas at 1/8 wavelength or lower above the ground, but I might not have operated in those particular circumstances.

Note that these reports appear to refer only to improved reception, where signal-to-noise ratio is more important than raw gain. Most stations use the same antenna for transmit and receive, so the corresponding loss of transmit signal strength at low heights must be considered as well, depending on the limiting factor for each station.

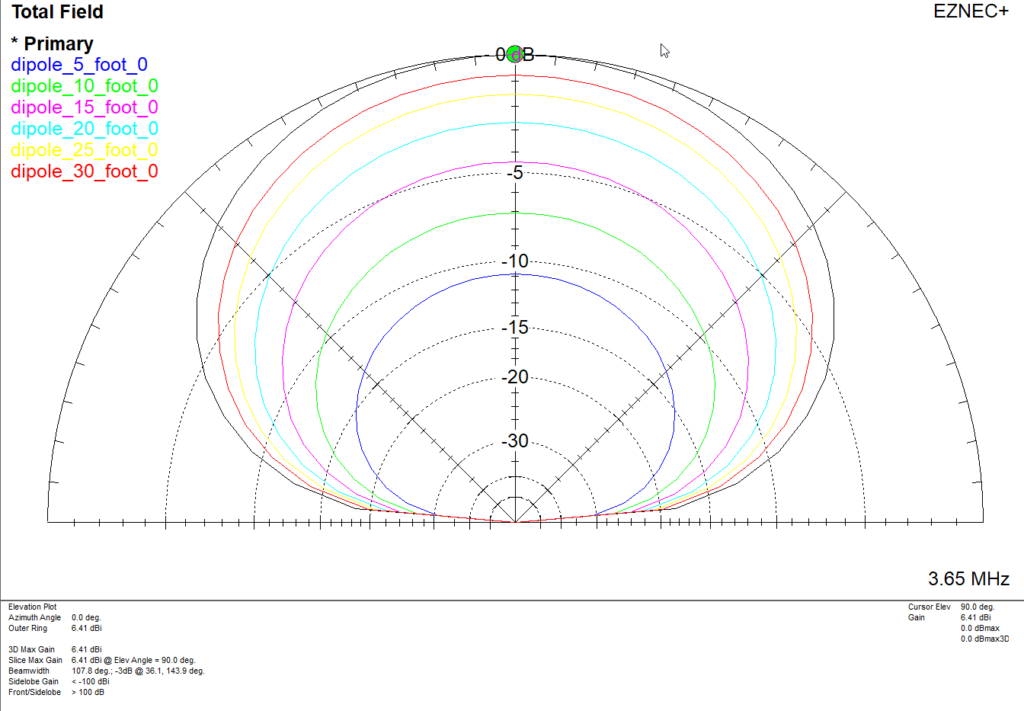

The usual explanation is that lowering a dipole reduces the pickup of low angle signals. However, this isn’t really the case: below 1/8 wavelength or so, the radiation pattern of a dipole antenna broadside to the wire remains relatively constant, but the overall strength drops as ground losses increase at low heights. The following plot shows the relative patterns of 80m dipoles from 9m (30 feet) down to 1.5m (5 feet) above the ground:

If we compare the red trace for 9m (30 feet) to the dark blue trace for 1.5m (5 feet) in the vertical direction, we see about a 10 dB difference between them. Scaling along the 45 degree line gives about the same result. Looking at the very bottom of the plot, where the straight line portion represents a vertical angle of 5 degrees, we still see about a 10 dB difference. Since the desired signal and the noise drop by the same amount, the signal-to-noise ratio won’t change with antenna height over this range when the desired signal is at a high angle and the interference is at a low angle.

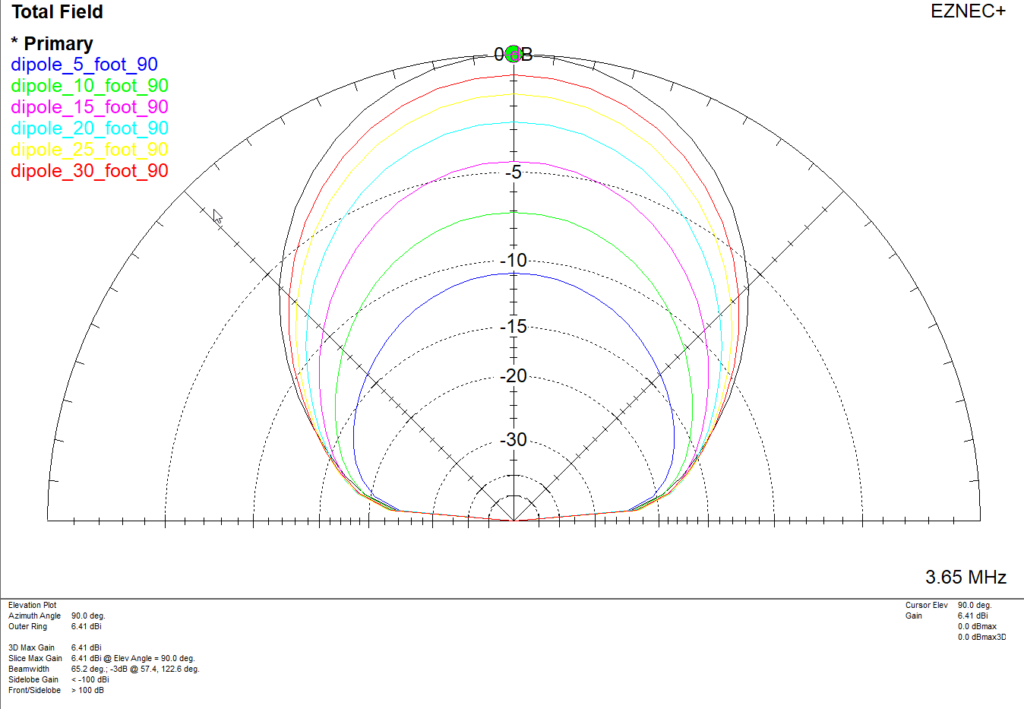

What about off the ends of the dipole? Here is the corresponding plot:

Of course, we still see about a 10 dB difference in signal strength between the red curve for 9m (30 feet) and the dark blue curve for 1.5m (5 feet) for signals arriving from overhead. But the difference is less than 9 dB at 45 degrees, and drops down to only 1 or 2 dB at very low angles. That would imply that the higher antenna would give a better signal-to-noise ratio in this case.
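The arithmetic behind both comparisons can be sketched in a few lines of Python. This is only an illustration, not a model of the antennas: the dB figures are the approximate values read from the plots, the 1.5 dB low-angle figure is my assumed midpoint of the "1 or 2 dB" range, and the function name `snr_change_db` is made up for this example.

```python
def snr_change_db(signal_drop_db, noise_drop_db):
    """Change in signal-to-noise ratio (dB) when the antenna is lowered.

    signal_drop_db: how much the desired (high angle) signal drops
    noise_drop_db:  how much the interfering (low angle) noise drops
    A positive result means the lower antenna has the better SNR.
    """
    return noise_drop_db - signal_drop_db


# Broadside to the wire: about 10 dB drop at both high and low angles.
broadside = snr_change_db(signal_drop_db=10.0, noise_drop_db=10.0)

# Off the ends: about 10 dB drop overhead, but only 1 or 2 dB at low angles.
# 1.5 dB is an assumed midpoint, not a measured value.
off_ends = snr_change_db(signal_drop_db=10.0, noise_drop_db=1.5)

print(f"Broadside SNR change: {broadside:+.1f} dB")
print(f"Off-the-ends SNR change: {off_ends:+.1f} dB")
```

A result of zero means lowering the antenna makes no difference to the signal-to-noise ratio, while a negative result means the lower antenna is worse.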

This is not to say that lowering the antenna might not help to improve the signal-to-noise ratio in some cases, only that the relative patterns of dipoles at low heights are not a good explanation.

What might cause this difference? I can think of a few possibilities:

- It may be more of an issue on 40m than on 80m. This effect would be more noticeable with antennas higher than 1/8 wavelength, which are probably more common on 40m. A discussion of antenna height in meters (or feet) without specifying the band can add a lot of confusion.

- The antenna doesn’t use an effective balun. Lowering the antenna reduces pickup of low angle vertically polarized signals on the feedline.

- Reducing overall signal strength (of both desired signal and noise) changes the behavior of the AGC circuit in the receiver. In that case, reducing the RF Gain control should give a similar improvement.

- When comparing two antennas, the orientation of each with respect to the noise source (and the received polarization of the desired signal) will make a difference.
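To illustrate the first point about bands, here is a rough sketch that converts a physical height into wavelengths. The frequencies (3.75 MHz for 80m and 7.15 MHz for 40m) are assumed mid-band values chosen for illustration, and `height_in_wavelengths` is a hypothetical helper name.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def height_in_wavelengths(height_m, freq_mhz):
    """Express an antenna height in metres as a fraction of a wavelength."""
    wavelength_m = SPEED_OF_LIGHT / (freq_mhz * 1e6)
    return height_m / wavelength_m


# Assumed mid-band frequencies, for illustration only.
bands = {"80m": 3.75, "40m": 7.15}

for height_m in (9.0, 1.5):
    for band, freq_mhz in bands.items():
        fraction = height_in_wavelengths(height_m, freq_mhz)
        print(f"{height_m} m on {band}: {fraction:.3f} wavelengths")
```

With these assumed frequencies, the same 9m (30 feet) height is below 1/8 wavelength on 80m but above it on 40m, which is why a height quoted without the band is hard to interpret.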

There may well be other factors. I hope to run further tests at some point to try to reproduce this condition.